I’ve tried many times to make a web site that did what I wanted it to do. The commercial ones provided a structure that didn’t fit my quirky needs, among which rendering mathematics was high on the list. I tried some non-commerical ones too. Again, the fit was not the right one for me.

The time had come for me to make make a site-making tool, then build my own site with it. My choice for the tool to make the tool was a Haskell project, Hakyll. Although I have done some Haskell programming, I am much, much less proficient and experinced that language than with Elm. Nonetheless, what I read intrigued me. It seemed right, so I plunged ahead, accompanied by my trusted and increasingly able Sancho Panza, Claude Code.

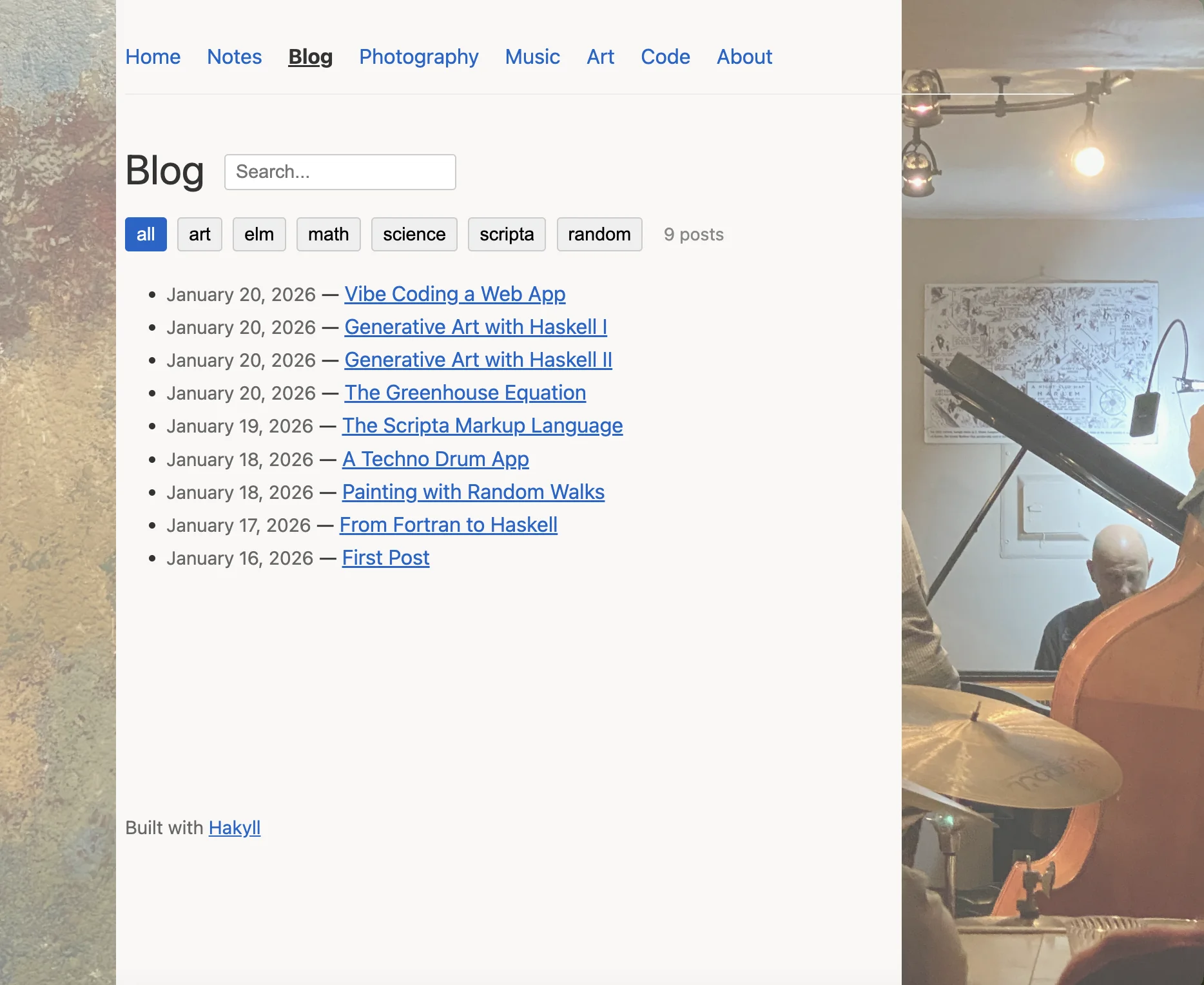

The first commit was on January 14, 2025. It is now January 20. The app does pretty much all I want of it, and I am starting to run low on ideas of what do do next. Here is what the app looks like today:

The Process

Since I had never used Hakyll before, I began to probe the possibilities with a bit of vibe-coding using Claude. I have quite a bit of experience with this AI, and have found that the more specific and limited in scope my requests, the better the results. In fact, the results were stellar, so good that I ended up vibe-coding almost the entire project.

The architecture is quite nerdy. Though I have, for example, a project directory /posts for blog posts, almost all the content I write comes from

The Archive, an app a friend of mine suggested I look at. To make a document show up in the /post route, I simply add the tag #post to the document. If I want to categorize this post as having to do with mathematics, I add the tag #tag:math. This creates a button on the blog page titled math. Clicking on that button shows only blog posts with that tag.

As for content, Markdown works, and so does Scripta, a radically simple markup language that I devised for scripta.io (serviceable, still in alpha!). Rather than writing a parser and renderer for Scripta, I simply taught it what to do. When I needed an element [u BODY] that underlines its body, I just said

Claude: Done. [u BODY] now underlines its body. It supports nesting like the other text elements.

Next was this conversation:

Claude: Done. The indent block is implemented:

| indent

This text is indented by 2em (default)And Claude added a nice feature that I had not mentioned, though I had previously intructed him that blocks can have arguments:

| indent 40

This text is indented by 40pxLinking posts

Suppose that you are in document A and want to make a link to documenb B where document B has a date UUID like 202601211234. It suffices to write 202601211234 in document B. The rendered text in document A will be a link to B with title = title of B.

Documents from Scripta.io

I asked Claude the following

I want now to be able to handle Scripta documents directly imported from Scripta. The first lines of such a document have the form

`| title <TITLE>

In addition, there may be a line that looks like [tags post tag:physics]

Such a line hould be handled as if it were #post #tag:physics

He/it replied:

Claude replied:

I understand. So for should I implement a preprocessor that:

- Detects and extracts the | title header

- Converts [tags …] lines to the #… format

This would allow you to import Scripta documents directly. Would you like me to proceed with this?

I told Claude to proceed. After about 5 minutes, he replied

The site rebuilds successfully. The preprocessScriptaImport function is now integrated into the compilation pipeline.

Here’s what I implemented:

Preprocessor handles:1. | title